Definitely, Maybe - Here Comes the Probability Processor

Artificial Intelligence in the form of a new computer chip that saves tonnes of space and operates on low power could revolutionise computers in the near future

A computer chip is no different from Mr. Spock in Star Trek. Ask it a straight question and you’ll get a straight answer: A “yes” or a “no”. Now ask it a question that doesn’t have a yes or a no but just a “perhaps” or a “maybe” as an answer and the chip will have its mundu in a twist.

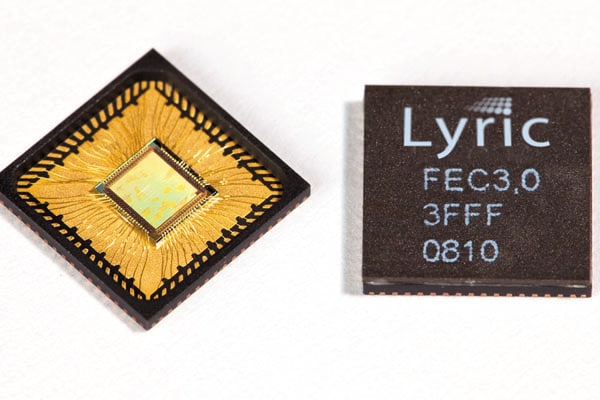

Ben Vigoda isn’t having any of this anymore. He is an MIT scientist who’s been working to create a new type of chip for close to a decade now. On August 17, Lyric Semiconductor, a start-up that he co-founded in 2006, unveiled what it called the alternative to digital computing — a “probability processor” that can compute “quite often” or “very rarely” instead of just “yes” or “no”. Lyric claims its “LEC”, a specialised processor for flash memory, offers a 30-times reduction in size and a 12-times improvement in power consumption compared to its digital counterparts today, without sacrificing performance.

Vigoda believes that for many tasks in today’s world his processors can do a much better job than the stuff that Intel or AMD make. And before you jump, Lyric’s chips won’t be replacing Intel’s Core or Xeon or AMD’s Opteron or Phenom, but assisting them. In that sense Lyric’s processors will be like the graphics or audio-processing chips that help Intel’s or AMD’s chips draw that 3D image or play that MP3 song faster and better.

To understand why this is important, go back 25 years when graphics software programmes like Microsoft Paint first became commercially available.

The calculation-intensive work of generating the drawing was done, albeit at a slow pace, by the main chip. As consumers demanded that these drawings be rendered faster on the screen, all the “calculation logic” was put on a hardware platform, the video graphics adapter chip. And that’s how the main chip got a graphics assistant.

Dealing with Ifs and Buts

Similarly, Lyric’s processor is proof that many computer applications today need to deal with scenarios rather than conclusive events. And these scenarios keep changing as time goes by. Consider your email for instance. Was that email titled “Please collect your free gift” just spam or a mail from your friend? Take another example: Movies. Presumably you have subscribed to one of those rent-a-DVD services. Now, what movie are you likely to order next from the online rental service?

All these require calculations based on probability and therefore nasty mathematics and logic. Such calculations require data about past behaviour, choices being made currently and the likelihood that the current choice will be consistent with past behaviour. For instance, it is quite possible that if you order Aamir Khan’s 3 Idiots on a rental Web site it will suggest Lagaan next, which is another Aamir Khan film. But you actually end up ordering Dead Poets Society. Now the algorithm changes its hypotheses. Maybe you like stories of charismatic teachers and students defying orthodoxy. It suggests Stand and Deliver. You look at the review and end up ordering that. Ah, goes the algorithm. It suggests Finding Forrester next. And the chain continues till you order Ghajini, which is when the algorithm goes into a spin again.
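The way such an algorithm “changes its hypotheses” can be sketched with Bayes’ rule. What follows is only an illustration, not any rental site’s actual method: the two hypotheses, the function names and every probability in it are invented for the example.

```python
# Illustrative sketch only: hypotheses and numbers are assumptions,
# not taken from any real recommendation service.

def bayes_update(belief, likelihood):
    """Return the updated belief over hypotheses after one observed order."""
    unnormalised = {h: belief[h] * likelihood[h] for h in belief}
    total = sum(unnormalised.values())
    return {h: p / total for h, p in unnormalised.items()}

# Belief after the first order (3 Idiots): both explanations equally plausible.
prior = {"aamir_khan_fan": 0.5, "inspiring_teacher_fan": 0.5}

# Assumed chance that each kind of viewer orders a given film next.
likelihood_of_order = {
    "Dead Poets Society": {"aamir_khan_fan": 0.1, "inspiring_teacher_fan": 0.4},
    "Stand and Deliver": {"aamir_khan_fan": 0.1, "inspiring_teacher_fan": 0.4},
    "Ghajini": {"aamir_khan_fan": 0.6, "inspiring_teacher_fan": 0.05},
}

def beliefs_after(orders, start):
    """Replay a list of orders and record the belief after each one."""
    belief = dict(start)
    history = []
    for film in orders:
        belief = bayes_update(belief, likelihood_of_order[film])
        history.append((film, belief))
    return history

if __name__ == "__main__":
    orders = ["Dead Poets Society", "Stand and Deliver", "Ghajini"]
    for film, belief in beliefs_after(orders, prior):
        print(film, {h: round(p, 3) for h, p in belief.items()})
```

With these made-up numbers, ordering Dead Poets Society lifts the “inspiring teacher” hypothesis from 0.5 to 0.8, and the Ghajini order pulls belief back towards the Aamir Khan hypothesis, which is the “spin” described above.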

Today more and more computer applications need to deal with such logic which requires a change in prediction based on a change in behaviour. Some might be predicting traffic routes, others may be suggesting books. “Probabilistic programs are better for tasks that involve uncertainty, including where some information is uncertain or unknown. They can also reason in a way that is much more natural to humans, for instance: ‘I’ve observed the outputs of this program, can I now reason what its inputs might have been?’ That is how human reasoning works, for example when we look at an email’s subject and sender and reason if it was sent by a spammer,” says John Winn, a researcher with Microsoft Research in Cambridge, UK.
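Winn’s idea of reasoning from a program’s outputs back to its inputs can be shown with a toy model of the email example. This is a hedged sketch rather than Infer.NET code: the forward model, the names and all the probabilities are assumptions made up for illustration.

```python
# Toy forward model: a hidden input (is the sender a spammer?) produces two
# observable outputs (the subject line and the sender's address).
# All probabilities are invented for illustration.

P_SPAMMER = 0.3
# P(subject mentions a free gift | spammer?)
P_FREE_GIFT = {True: 0.6, False: 0.05}
# P(sender is in your address book | spammer?)
P_KNOWN_SENDER = {True: 0.02, False: 0.7}

def joint(spammer, free_gift, known_sender):
    """Forward direction: probability of one complete scenario."""
    p = P_SPAMMER if spammer else 1 - P_SPAMMER
    p *= P_FREE_GIFT[spammer] if free_gift else 1 - P_FREE_GIFT[spammer]
    p *= P_KNOWN_SENDER[spammer] if known_sender else 1 - P_KNOWN_SENDER[spammer]
    return p

def prob_spammer_given(free_gift, known_sender):
    """Backward direction: P(spammer | what we observed about the email)."""
    p_spam = joint(True, free_gift, known_sender)
    p_friend = joint(False, free_gift, known_sender)
    return p_spam / (p_spam + p_friend)

if __name__ == "__main__":
    print("unknown sender:", round(prob_spammer_given(True, False), 3))
    print("known sender:  ", round(prob_spammer_given(True, True), 3))
```

Under these assumed numbers, the same “free gift” subject line looks very likely to be spam from an unknown sender and fairly unlikely to be spam from someone in your address book.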

Since more people depend on such calculations and need them fast, the time has come for this logic to migrate to a hardware platform, just like the graphics processor. So Intel and AMD now need a “probability calculator” assistant.

Reading Between the Lines

This makes economic sense as well. To do just basic probability calculations, a conventional microprocessor ends up using almost 500 “transistors”, which are the fundamental building blocks of a chip. Lyric’s chip uses just a fraction of this amount. This means a smaller chip size and less power consumption. That’s a real help for the main microchip. Intel’s Pentium 4 consumed between 60 and 80 watts, compared with 30 to 40 watts for the Pentium III.

So how does Lyric manage to reduce the circuitry it needs? Its circuits use the electrical signals conventional microprocessors are designed to ignore. For instance, a conventional microprocessor’s circuits will only recognise 1 volt as “1” or 0 volts as “0”; “1” and “0” are the basic building blocks of computer calculations. Lyric’s technology uses voltages between 0 and 1 volt to compute probabilities between 0 and 1!
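To see what it means for a gate to work on values between 0 and 1, here is a software sketch of logic gates whose inputs are probabilities. It is not Lyric’s circuit design, which the article does not describe; it assumes the two inputs are independent, and the function names are invented for this example.

```python
# Each input is the probability that a bit is 1 (a value from 0.0 to 1.0).
# Assumption: the two inputs are statistically independent.

def soft_not(p):
    """Probability that NOT a is 1."""
    return 1.0 - p

def soft_and(p, q):
    """Probability that both bits are 1."""
    return p * q

def soft_or(p, q):
    """Probability that at least one bit is 1."""
    return p + q - p * q

def soft_xor(p, q):
    """Probability that exactly one bit is 1."""
    return p * (1.0 - q) + q * (1.0 - p)

if __name__ == "__main__":
    # With inputs of exactly 0 or 1 these behave like ordinary digital gates.
    print(soft_and(1.0, 0.0), soft_or(1.0, 0.0), soft_xor(1.0, 1.0))
    # With in-between inputs they return in-between answers.
    print(soft_and(0.8, 0.5), soft_xor(0.5, 0.9))
```

A digital chip would have to encode each of these in-between values as a string of bits and run multiplications on them, which is where the extra transistors go.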

Since the circuits work directly with probabilities, a lot of the extra complexity of digital circuits can be eliminated. This leads to a drastic reduction in the number of transistors and, as a result, circuit complexity. Fortunately, the computer science world is ready with its stock of algorithms to aid this migration of probability calculations to the realm of hardware.

“The core of probabilistic computing is algorithms. The reason you’re seeing newer hardware and software in this space is because they can exploit over 30 years of work in algorithms that can solve diverse kinds of problems in very efficient ways,” says Prof. Manindra Agrawal, the head of the computer sciences department at IIT Kanpur.

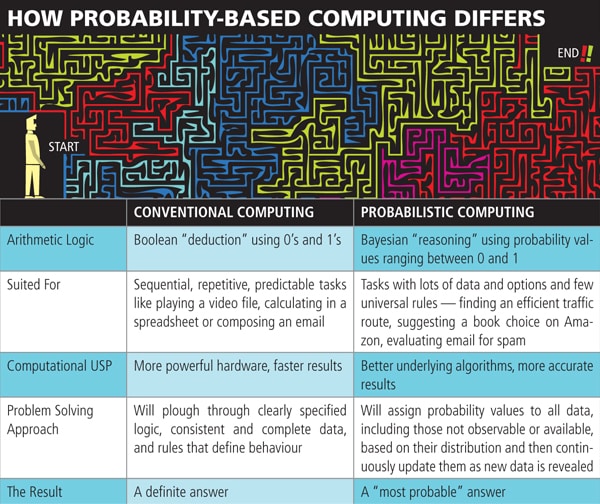

Infographic: Hemal Sheth

These algorithms offer developers and problem solvers alike “a calculus to make good guesses”, says Noah Goodman, a research scientist at MIT’s Computational Cognitive Science Group. The Holy Grail of mathematicians is, however, to develop a “general purpose inference algorithm” that can solve all kinds of problems by looking at any kind of data.

Prof. Agrawal says software programmers are today using these algorithms by explicitly coding them into their programs, but in the future these algorithms might come pre-embedded as software libraries that they can use with just a line or two.

In fact, the algorithm’s journey from research papers into programming tools may have already begun. Winn says Microsoft’s still-under-development probabilistic computing framework “Infer.NET” can allow developers to compute probability-based tasks like recommending a new movie based on prior choices in “20 lines of code created in hours which might otherwise take months of work and over hundreds to thousands of lines.”

Winn says, though Infer.NET is still primarily a research project for Microsoft, it is already being used by researchers and programmers outside the company to solve real world problems. In addition to giving it away free of cost to non-commercial users, Microsoft is also testing it in a limited manner for commercial use.

To be fair, probabilistic computing is still a few years away from becoming widely available. The bulk of the work that is taking place in the field is within universities like MIT and Stanford or research divisions of companies like Microsoft and Google.

Researchers in the field of Artificial Intelligence — getting computers to simulate the human brain in their working — are also increasingly looking at probabilistic computing as a better foundation for their pursuits than rules-based learning (the first phase of AI) or statistical machine learning (the second phase).

“AI’s ultimate goal was to mimic human behaviour. And humans have an in-built ability for probability processing. We never think in binary,” says Prof. Agrawal.

“Probability is bringing us into the next generation of AI and much more powerful computers that can handle data that is orders of magnitude more complex,” says Winn.

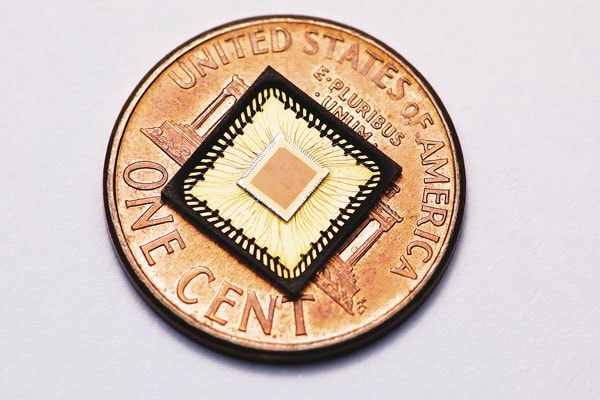

Small Wonder: The Lyric LEC against a penny

What’s clearly taking place now are developments across the entire probabilistic computing landscape, ranging from high-level languages to compilers to low-level languages and dedicated hardware. These include “Church”, a probabilistic programming language developed by Goodman and his research colleagues at MIT; Microsoft’s development framework Infer.NET; the new programming language “CSoft”; and Lyric Semiconductor’s “GP5” multipurpose probability processor.

As each of these links matures, the entire chain will fall into place, eventually turning probabilistic computing into a mainstream concept that will be found inside all kinds of computers and electronic devices. Operating on probabilities natively across the chain, from the programming language right down to the processor’s instruction code, “could significantly speed up the computation process because you no longer have to use software techniques to simulate probability on hardware that was deterministic,” says Prof. Agrawal. Vigoda says his GP5 general-purpose probability processor, when it comes out in 2013, might be 1,000 times more powerful than digital processors.

Could probabilistic computing then overthrow its predominant deterministic counterpart, and become the mainstream?

Unlikely, says Vigoda. He feels probability processors will more likely complement today’s CPUs by taking on the workload where probabilities are involved while leaving the “1+1=2” type of conventional operations for the CPU. “In most PCs there is already a CPU [main processor] and a GPU [graphics processor], so probability processors might become another.”

MIT’s Goodman agrees that in the short term probability processors might only be seen as a new class of processors, because they’ll need to fight decades of entrenched experience in making conventional CPUs work really well. “But down the road it’s pretty likely that CPUs will become probabilistic, because the set of things you want your computer to do with each passing day can only benefit from probability. And when these things get good, we’re going to see a lot more of them much faster than we saw GPUs.”

(This story appears in Forbes India.)