We must keep our hands on the (AI regulation) steering wheel: Abhinav & Raghav Aggarwal

AI holds immense potential for positive change, but it's not without its risks. The objective of regulation should be to foster democratisation of this dynamic tech

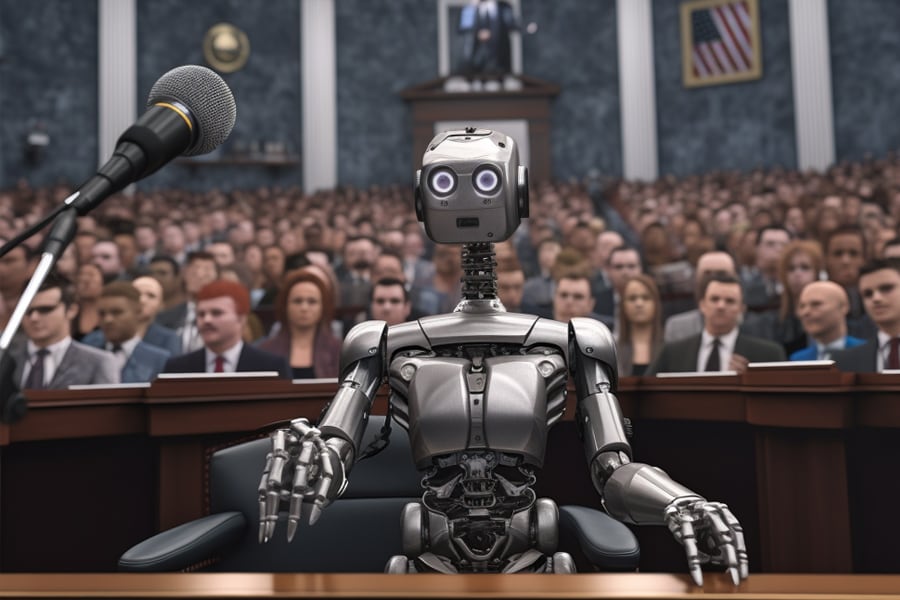

This image has been generated by Fluid AI GPT

In these wild times, where every dinner table conversation is likely to veer into a spirited debate about AI (artificial intelligence) regulation and whether we'll be waking up to a real-life Terminator sequel, one thing is crystal clear: we've got an epic challenge on our hands. The biggest problem that comes up is that no two people share the same definition, or the same underlying understanding, of what AI actually is today and how it works. Don't believe us? Ask everyone in your house this question and you'll soon agree. So, the first key step is to define it and get everyone on the same page. AI is software code combined with advanced mathematics that is capable of improving its output by taking feedback. That's it! And no, it doesn't have consciousness or independent thought (yet!), the things that make us human.

So, think of AI like a big ol' collection of math formulas, designed to get a job done based on what we humans tell it to do. That job could be anything from making a self-driving car safe on the road to having a friendly chat.

‘Anti-Hallucination’ Layers

When it comes to regulations, we've got to make sure we keep our hands on the steering wheel of this AI ride. Rather than getting lost in the nuts and bolts of the code, it's about asking, "Who's telling this AI what to do, and why?"

AI is getting more complicated every day. But that's not always a bad thing. Just look at AlphaGo, the Go-playing AI from Google's DeepMind: it made some wild moves no human would have guessed, but it sure did win the game. What's crucial is keeping an eye on these AI systems, which means focusing AI governance on outcomes rather than on the procedures used to achieve them.

And now that we're heading into the world of generative AI, we've got to watch out for AI making stuff up. Regulators have to make sure AI isn't pulling answers out of thin air. At Fluid AI, we've seen this first-hand when we ingest an organisation's data to provide an interactive AI layer on top of it. We're dealing with this by adding 'Anti-Hallucination' layers: our way of keeping our AI honest by having it provide references for its answers.